War has “always been the mother of invention,” wrote the historian A. J. P. Taylor. The First World War in particular is often taken to be a hinge in technological history — the war in which horses were widely used for the last time and weapons of mass destruction were deployed for the first time. It is not altogether wrong to think of it as the “chemists’ war” or the “engineers’ war,” as it is sometimes labeled.

However, the centenary of the Great War provides an occasion to explore the ways in which the truth is rather subtler than the conventional wisdom. With a few notable exceptions, most of the iconic technologies of the First World War were not in fact invented during or because of the war. Rather, they were modifications of existing civilian technologies developed during peacetime. Nor did the war effort engender many truly transformative technological innovations, even those for which the war is most famous. In this sense, World War I was not the mother of invention.

But the war was a turning point in another, far less understood, way. As we shall see, the greatest invention of World War I was not so much any particular machine but the war machine itself. In the United States especially, the war helped assemble the loosely connected elements of technology, industry, academic science, and government into the first glimmerings of what President Eisenhower would later dub the “military-industrial complex.”

In telling this story, we will focus on two pivotal American figures: the chemist and chemical engineer Arthur D. Little, who championed the idea of industrial research, which would come to play a key role not just in advancing corporate competitiveness but also in the new technologies of warfare; and the astronomer George Ellery Hale, who successfully campaigned to create the National Research Council, thereby elevating the authority of science as an enterprise vital to the public good. Crucial to these changes were the twin goals of integrating science into industrial research and transforming academic science into a professionalized discipline deserving large-scale political and financial support. To be sure, the myriad technological advances associated with the First World War are an important part of this story, even if such advances are too often thought of as taking place in the vacuum of war. It is to these wartime advances that we first turn, offering a slight but necessary corrective to the way that the interaction of war and technological progress is usually depicted.

The many technological innovations most prominently associated with World War I may be grouped into three categories: first, weapons technologies invented for, or in most cases improved upon or scaled specifically for, warfighting; second, medical innovations occasioned by the war’s traumas; and third, non-weapons technologies catalyzed by or commercialized because of the war more generally. An exhaustive catalogue of wartime technology is beyond the scope of this essay, but a brief survey will suffice to show that the war did not cause the invention, as such, of many of its best-known technologies.

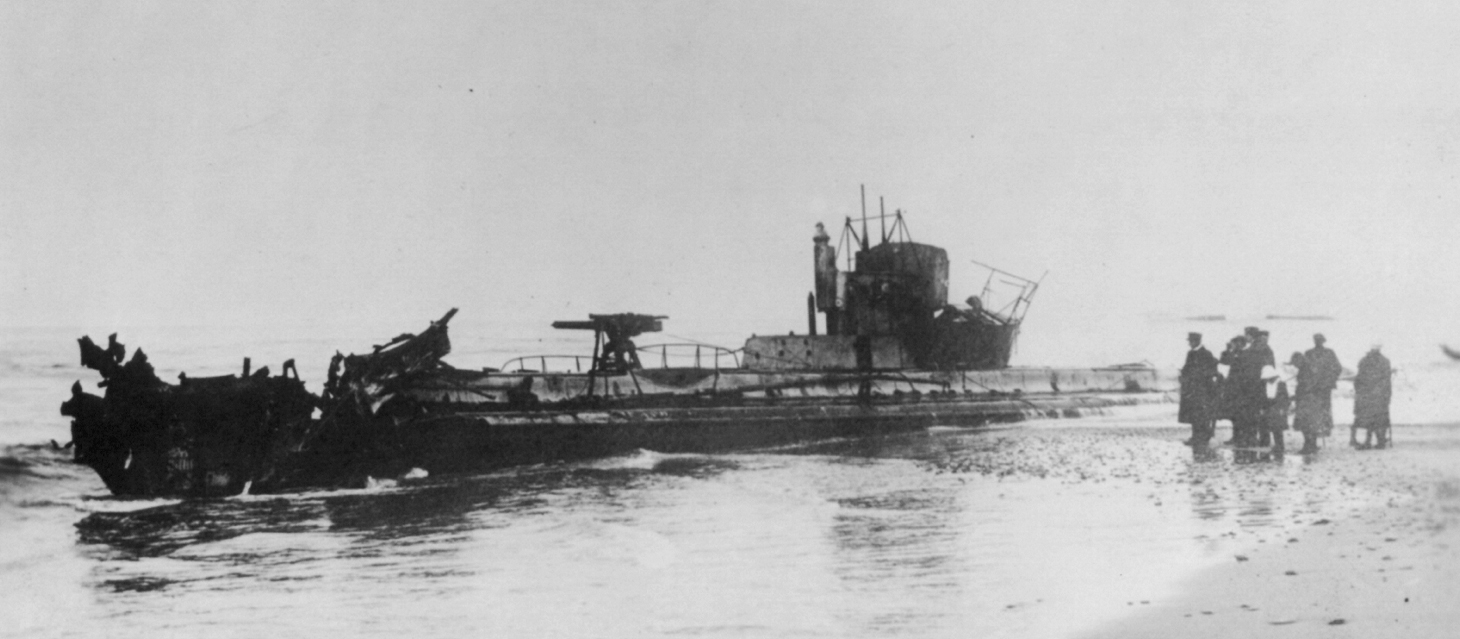

Let us start with the weapons. The sinking in May 1915 of the British ocean liner Lusitania — resulting in the deaths of more than a thousand people, over one hundred of them American citizens — is remembered now as the event that began the chipping away of anti-war sentiment in the United States. It also epitomized one of the war’s most famous technologies: the submarine. Although the submarine was not a German invention and even predated the war by a half-century (or more if you count prototypes and rare curiosities), it is one of the technologies most closely identified with the war. Early Allied attempts to overcome Germany’s advantage in underwater operations were reminiscent of old military concepts: ramming, for example, was considered a tactic of choice early on. The German submarine stranglehold was not broken until the adoption of a technology invented earlier for civilian use: the hydrophone. When combined with the development of the hydrostatically triggered depth charge, this allowed the Allies to detect, locate, and destroy submerged German submarines.

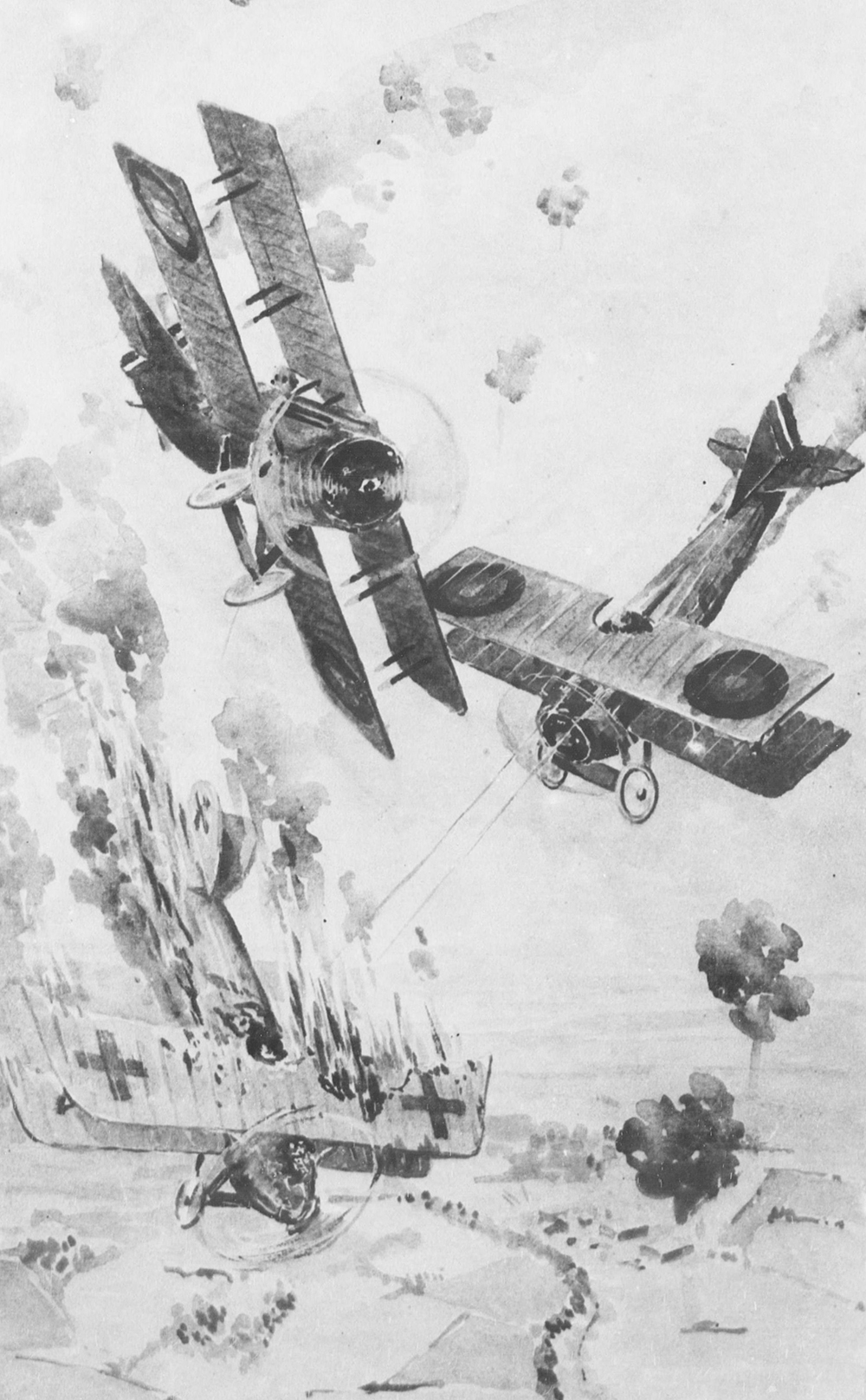

Like the submarine, the machine gun had existed for decades before the war. Though machine guns had evolved considerably, more important was their widespread availability, which contributed to the stalemate of trench warfare on the Western Front. Machine guns were also used in novel ways. For example, they could be mounted on airplanes thanks to a clever innovation: the interrupter gear, which synchronized the gun’s firing with the plane’s propeller so that the bullets would not hit its blades.

The First World War has sometimes been called an “artillery war,” for instance by military historian John Terraine in his 1982 book White Heat. According to some estimates, shellfire caused 60 to 70 percent of all battle casualties. But while there were many refinements in artillery and shell-related technologies during this period, the shrapnel shell, which contributed terrifyingly to the grisly brutality of the war, was originally developed in the 1780s by Henry Shrapnel, the Briton for whom it is named.

The fragmentation hand grenade, the tank, and chemical weapons are better candidates for genuine inventions of the Great War. The first, the “Mills bomb,” as it came to be called in Britain, was introduced in 1915 by Sir William Mills (whom, on a personal note, family lore claims as a distant relation to the authors). However, his design improved on an earlier model developed by Belgian Army captain Léon Roland. Meanwhile, the tank combined the earlier inventions of high-strength steel and the internal combustion engine, all in service of overcoming barbed wire, trenches, and machine guns. But while the tank did make its battlefield debut during the conflict, it was not produced or deployed in large numbers and remained of marginal tactical effectiveness; its true potential would not be realized until the Second World War.

The Great War is often referred to as the “chemists’ war,” in a nod to the horrific legacy of chemical weapons. The first large-scale and widely reported chemical attack was on April 22, 1915, when German forces released chlorine gas near Ypres, Belgium. Allied troops engulfed by the cloud of poison found themselves “drowning on dry land as their lungs filled with fluid,” according to weapons expert Jonathan B. Tucker. Their skin and eyes seared by the chemical, they “gasped painfully for air and coughed up a greenish froth flecked with blood.” An eyewitness quoted in the New York Times described the effect on soldiers as “without doubt the most awful form of scientific torture.” The use of gases escalated from there, with both sides deploying chemical weapons until the war’s end. Chemists helped develop novel types of poison gas — from “mustard gas” (used by the Germans) to Lewisite (invented by the Americans but never used during the war). Through the invention of such terrible weapons and the development of the associated wartime research, chemists certainly played an infamous role in the war effort. But beyond adapting chemistry as a tool for killing, the war saw few, if any, major inventions in chemical engineering. The famous Haber-Bosch process, for example, a method for synthesizing ammonia from hydrogen and nitrogen, which Germany used in manufacturing its explosives, was developed for industrial purposes several years before the war began.

It is in the next two categories — medicine and technologies other than weapons developed or improved for the needs of the war and its soldiers — that we find an array of advances whose impact would be felt most in the lives of ordinary citizens long after the war.

During the war, medical care followed patterns of innovation similar to those of weapons technology, with wartime needs making it vital to extend and improve upon existing tools and practices. For example, though X-ray machines date to the late nineteenth century, the first mobile X-ray machines were deployed during World War I (Marie Curie herself helped equip the vehicles). Similarly, the war saw the widespread use of the cellulose bandage (which had previously been invented in the civilian sector and which in turn led to the sanitary napkin), motorized ambulances (pioneering the concept of rapid evacuation to dramatically shorten the time to treatment), and blood transfusions. In the nineteenth century, blood transfusions were rare and dangerous. But a dozen years before the war, the mystery of why some transfusions were successful while others were deadly was solved: there are a few different blood types, some of which are incompatible. While such knowledge was not new in 1914, World War I was the first time it was widely put to use, with tens of thousands of wounded men receiving transfusions. The war also saw advances in blood preservation and, in an attempt to meet the need for donations, the creation of what are often considered the world’s first blood banks.

There were, however, some notable medical advances born out of the war. In fact, medicine arguably had a higher proportion of innovations resulting directly from the war than weaponry did, partly because of the introduction of deadly modern weapons. The traumatized behavior of many soldiers returning from the front lines — tremors, hypersensitivity, confusion, lassitude, a “thousand-yard stare” — became known as “shell shock,” what medical researchers now call combat stress reaction. As Peter Leese explains in his 2002 book Shell Shock, while early medical treatments for shell shock were of limited effectiveness, post-war press attention to the problem led to major advances in the 1920s and 1930s.

Similarly, modern plastic surgery was pioneered because of the injuries from high-explosive fragmentation ordnance, as was the splinting of broken femurs. The latter caused the associated fatality rate to drop from about 80 percent to 20 percent. (Many of these and other developments are movingly chronicled in Emily Mayhew’s recent book Wounded.) Even Sir Alexander Fleming’s discovery of penicillin can be substantially linked to the Great War, though it came a decade after the war’s end: Fleming credited his experience in battlefield hospitals with shaping his later research. Indeed, the war functioned like a vast laboratory for the implementation and testing of not only organizational and administrative techniques for medical treatment, but also the various contagion theories of disease that had been hotly debated in the medical community during the decades preceding the war.

The third category of innovations associated with the Great War — non-weapon, non-medical technologies — exhibits most explicitly the pattern of innovation we have seen in the first two categories. Though the roots of these discoveries and inventions mostly precede the war, the needs of warfare made advancement or modification urgent, while the magnitude of demand stimulated industrial-scale production.

For example, early versions of hydrophones were originally used for navigation, and then, in the wake of the sinking of the Titanic in 1912, were adapted for locating icebergs. But the demands of submarine warfare required that this technology be militarized and commercialized. Similarly, the Englishman Harry Brearley invented “rustless” or “stainless” steel in 1913, just before the war, making possible modern aircraft engines, as well as better dining utensils and medical instruments. Other examples include the zipper (promptly adopted by producers of aviator suits and sleeping bags), secure optical communications (the heliograph), daylight saving time (to save energy), the tea bag, the first widespread and institutional distribution of condoms, and the wristwatch (which became common issue for soldiers and which many veterans continued to wear after the war).

While the First World War began the full military integration of real-time communications, the telegraph had been used in a number of wars since the 1850s. Wireless telegraphy and the radio antedated the war by more than a decade, but during the war years the necessary technology was made more portable and thus suitable for use in the field and at sea. The war also brought the first rudimentary advances in radio communication between airplanes and the ground and in field telephone systems allowing communications with the front lines, and in some small ways it hinted at the possible use of radio for broadcasting.

Also new with the First World War was the use of personal cameras on the front lines in the hands of individual soldiers and civilians, allowing for a higher volume of candid photographs than had been possible in earlier wars and making war photography a new feature of newspapers and propaganda.

Airplanes also typify this burgeoning industrial-military model. The airplane was famously invented more than a decade before the First World War by two civilians, the Wright brothers. But the militarization of airplanes led to some of the war’s most dramatic and memorable episodes, especially aerial dogfights; Germany’s Red Baron remains a legend to this day. Still, although all the world’s militaries would eventually add air forces to their armies and navies, during the First World War air power was not yet strategically essential. (Interestingly, on March 6, 1918, a pilotless drone took flight and landed successfully for the first time, in a U.S. Navy project that would be abandoned a few years later to await the emergence of the necessary associated technologies.)

Crucially, “pure science” and academic scientists played at most minor roles in the development of most of the aforementioned technologies. The technological innovations that would be put to use for the military were largely due not to science but rather to industry and industrial engineering, which is why the war is perhaps most aptly called a “war of engineers.” As English physicist and engineer John Ambrose Fleming said in a 1915 lecture, “It is beyond any doubt that this war is a war of engineers and chemists quite as much as of soldiers,” and “to win this war we have to achieve engineering feats.” And a 1918 article in The Scientific Monthly explained that “the existing war is essentially a war of engineers; for it is they who are manufacturing the guns, ammunition, vessels, motors, and the other paraphernalia requisite for carrying on the struggle, and who are attending to the transportation of men, munitions, food, and all other supplies by both land and sea, besides doing their fair share of the fighting.”

However, over the course of the war scientists came to play an increasingly essential role in industrial research, directing and advising military leaders, politicians, industrialists, and technicians, thereby creating the modern model of corporate and government “research and development.”

Throughout the nineteenth century, American businesses had ably harnessed the power of the technologies of the Industrial Revolution, pioneering and mastering the art of mass production. From the early mill towns on the East Coast to Cyrus McCormick’s reaper factory in Chicago to the giant Singer plants making sewing machines, vast booming sectors of the U.S. economy took advantage of mechanized mass production, powered at first by water, then by combustion engines. By the time war broke out in Europe, Taylorism had been boosting factory efficiency across the country and Henry Ford had already sold hundreds of thousands of his mass-produced Model Ts. All told, America’s manufacturing output had risen from 7.2 percent of the world total in 1860 to 32.0 percent in 1913.

Against the backdrop of such industrial success, it must have sounded strange when, in 1913, Arthur D. Little argued for the necessity of a new industrial model. At a meeting of the American Chemical Society in Rochester, New York, Little, the society’s president, concluded his presidential address with this pronouncement:

> Modern progress can no longer depend upon accidental discoveries. Each advance in industrial science must be studied, organized and fought like a military campaign.

What Little rightly perceived was that the Western world was becoming engulfed by a new wave of industrialization — one less dependent on the mechanical arts of old than on the new sciences of chemistry and electricity. Chemistry, for example, had begun to play a crucial role in the large-scale production of disinfectants, dyes, fertilizers, plastics, and photography, while electricity was indispensable for telephony, illumination, and the radio.

Little argued that, because the emerging industries and products were the fruits of new science, rather than disparate mechanical inventions, it was necessary for American industries to adopt a new model based on a dedicated internal scientific research department. Today it is hard to imagine any large business enterprise — whether McDonald’s, Wal-Mart, General Motors, or Google — lacking corporate research and development, so integral is it to our conception of technological advancement and competitive advantage. But while technology was widely recognized as essential to America’s economic growth when Little delivered his remarks, the idea that scientists could put their disciplines to work in direct service to industry was still relatively novel. Indeed, with few exceptions, scientists in late-nineteenth- and early-twentieth-century America were to be found in academia, not in industry and rarely in the employ of the military.

Few people were better positioned to see the need for this new model than the Bostonian Little (1863–1935). In the 1880s, after studying chemistry at M.I.T., Little worked in the New England papermaking industry. There he learned to apply his scientific knowledge in an industrial setting. He then founded one of the first independent commercial research laboratories and became a pioneer in the new field of chemical engineering. (An eponymous company that he started, dedicated to performing analytical studies for other businesses, still survives today as a management consulting firm.)

In his speech, Little pointed to a few exemplars of the new industrial research model: Thomas Edison’s General Electric labs, as well as those of AT&T, Westinghouse Electric, DuPont, Eastman Kodak, and several prominent German firms. Little himself had even been commissioned to create a research department for General Motors. Nevertheless, such companies remained exceptions in the early part of the century; the domains of academic science and industrial science had yet to be fully integrated, with scientists and engineers rarely working hand-in-hand. As Little put it, “Whatever may be said … of industrial research in America at this time is said of a babe still in the cradle.” But industrial research had already had some great successes — the babe had, “like the infant Hercules, already destroyed its serpents” — hinting at a glorious future.

As historian Paul A. C. Koistinen, author of a five-volume series on the political economy of American warfare, has shown, World War I was the first large-scale conflict for which total mobilization — political cooperation with industry and research for the purposes of the war effort — became possible, even necessary, with public and private interests “inextricably combined.” And it was, too, a war of factories and farms aiming to out-produce the enemy. Before entering the war, the United States supplied the Allies with food, materiel, and equipment, and then, after joining the fight in 1917, overwhelmed the Central Powers with the sheer quantity of machinery it put in play. This weight of numbers left Germany at a severe disadvantage in the later stages of the war and was a significant factor in its demoralization and its acceptance of an armistice in November 1918.

Industrial means of production thus became means of warfare; moreover, thanks to the emergence of the commercial laboratory, industrial research became the means of innovation, directing businesses to respond to the new technological needs of the military. In time, American industry would see the full imprint of Little’s concept of research, so that when the Second World War broke out, the American military and government were able not only to leverage an existing and enormous infrastructure, but also to co-opt the emergence of an entirely new and powerful complex of directed industrial, technological, and increasingly scientific research.

But while industry had begun, in the years leading up to the war, to harness scientific research for commerce and warfare, the potential usefulness of science for the public good was still widely underappreciated. This, too, was about to change.

In the aftermath of Lusitania’s May 1915 sinking, the U.S. Secretary of the Navy turned to famed American inventor and businessman Thomas Edison for help in creating a coalition of the nation’s “keenest and most inventive minds,” which together with Edison’s own “wonderful brain to aid us” would find a new technological means of combating the submarine. Within three months, Edison had assembled the Naval Consulting Board, a panel of distinguished inventors, engineers, and scientists.

From today’s perspective, there is nothing unusual about convening governmental advisory bodies composed of those with scientific and technical expertise. In fact, the prestige scientists now enjoy in the United States — survey data show that Americans have more confidence in scientists than in judges, teachers, religious leaders, bankers, media personalities, business leaders, or politicians — derives in large part from the sense that they are preeminent problem-solvers. The use of scientific research by every department of the federal government has become a commonplace, if not always uncontroversial, feature of modern political life.

But in 1915, it was still rare for the government to request outside technical expertise in this way. Although the National Academy of Sciences had been founded in 1863 with the aim of offering scientific advice to the nation’s leaders, its services had been requested a mere fifty-one times in fifty-two years. In general, to be a professional scientist in the United States in the nineteenth and early twentieth centuries was not to be a public expert in our modern sense — someone called upon for political or legal testimony, for technical advice or services. Rather, most scientists remained within the walls of the academy. Some even expressed reluctance to seek profits or advise politicians if it meant abandoning their noble vocation of searching after truth and teaching students.

One figure who found this state of affairs unacceptable was the American astronomer George Ellery Hale (1868–1938), who would be pivotal in the outward turn of American science — in its professionalization and its rising public role.

Born into a wealthy Chicago family, Hale, while not ostentatious, was atypical among American scientists for his capacity to move comfortably from scholarly to patrician and industrial circles. He founded and edited The Astrophysical Journal, and founded and fundraised for the Yerkes Observatory in Wisconsin and the Mount Wilson and Palomar Observatories in California. Hale was also an inventor: he was elected to the Royal Astronomical Society at the age of twenty-two for creating a photographic device useful in astronomical observations.

In 1902, when he was in his mid-thirties, Hale was elected to the National Academy. As the historian of science Daniel J. Kevles recounts, Hale became “an outright activist” in the Academy’s affairs. He served as its foreign secretary and believed that it was the ideal vehicle for the promotion of science in the United States. His timing could not have been better; as Kevles explains, scientific journals and funding were rapidly expanding during the first years of the twentieth century, and membership in the American Association for the Advancement of Science rose fourfold in the fifteen years between 1900 and 1915, from 1,920 to 8,325.

Starting in 1913, Hale wrote a series of articles for the journal Science laying out his vision for the Academy; these would form the basis of his short 1915 book National Academies and the Progress of Research. Among his important recommendations was the creation of a new journal — what would become the Proceedings of the National Academy of Sciences, now one of the world’s most important scientific publications. Hale also argued that the chief purpose of the Academy should be to “uphold the dignity and importance of scientific research, and to diffuse throughout the nation a true appreciation” of the benefits of science, but that it must also “enjoy the active cooperation of the leaders of the state.”

But the war would become the most important catalyst for major change at the Academy. “In the middle of the Lusitania crisis,” Kevles writes, Hale suggested to colleagues that the Academy “offer its services to President Woodrow Wilson. The fading of the crisis made the proposal untimely.” Hale then confessed to a friend that he found it “depressing” that Thomas Edison had convened the Naval Consulting Board with no formal role for the Academy. But in 1916, Hale tried again: as the crisis was escalating once more, Hale joined a delegation from the Academy that visited the White House to present President Wilson with an offer of assistance. The president accepted the offer, and by June 1916, a new arm of the Academy had been established — the National Research Council. Its purpose, Hale wrote in a preliminary report, was “to bring into co-operation existing governmental, educational, industrial, and other research organizations with the object of encouraging the investigation of natural phenomena, the increased use of scientific research in the development of American industries, the employment of scientific methods in strengthening the national defense, and such other applications of science as will promote the national security and welfare.”

During the remainder of the war, the Council, with Hale as its first chairman, was involved in a wide range of activities, assisting both government agencies and private bodies. The Council provided topographical information to the Army War College, helped the Army Signal Corps improve its capacity for sound ranging (a technique for determining the location of enemy artillery pieces), advised the Navy on improving its range finders, worked with manufacturers to help determine where botanical raw materials necessary for production could be found, and much more.

The work of the Council provided Hale with the evidence he needed to make a convincing case for the importance of scientific research both to national security and to the post-war economy. As Kevles notes, Hale wrote to President Wilson later in the war to warn that America could not “compete successfully with Germany, in war or peace, unless we utilize science to the full for military and industrial purposes.” This was no longer a mere platitude; as evidence of the fecundity and necessity of scientific research he could point to the work of scientists on the Council who were developing weapons, tools, and techniques for all fronts, from submarine detection to chemical warfare.

Hale’s vision for transforming the National Academy of Sciences must be understood in the context of broader changes then underway. The very idea of science had been evolving in the preceding decades, shedding many of the vestiges of its earlier classical conception. The terms “natural philosopher” and “natural philosophy” had fallen into disuse, and even the phrase “men of science” — redolent of an era of amateurs with wealth and leisure — was being supplanted by the more distinctly professional term “scientists.” Science was becoming more public and more professional.

It was also becoming more practical. Although we should be wary of anachronism when using such terms as “pure” and “applied” science during the early twentieth century — since the meanings of those terms changed in important ways during subsequent decades — the notion of a division between that research which adds to our store of knowledge and that which exploits knowledge for practical benefit was already familiar. In the years leading up to America’s entry into the First World War, in the pages of prominent science publications, pure science was touted as necessary, not only in itself, but especially for the practical, specifically technological, benefits it made possible. Thus, for example, a Scientific American article from 1911 explained that, in the view of most thoughtful people, “the seemingly most abstruse scientific investigations have again and again grown to unexpected and most important useful application.” Some leading American scientists, such as anatomist C. Sedgwick Minot in a 1911 Nature article, argued that practical results depended on the theoretical knowledge arising from pure research, that the power we possess over nature is a result of scientists laboring “with a pure devotion uncontaminated by any worship of usefulness.”

We can see in the arguments of these thinkers and many of their contemporaries the emergence of what today’s historians and philosophers of science call the “linear model” for understanding the relationship of science to technology. On this model, which holds sway in today’s public discussion of science (despite being rejected by nearly all science policy scholars), technological innovation begins with scientific theories and discoveries, which are then “applied” to real-world problems, thereby leading to the development of new technologies. Even the “pure science” that had once been thought to be carried out with no particular practical aims in mind was now thought to be the foundation or basis of the rest of scientific research and technological development.

At the same time that science was being reconceived along more practical lines, it was also being recast as essential to the public welfare — in keeping, at least to some extent, with the Progressivism of the day. For example, the botanist John Merle Coulter, writing in a 1910 article critical of what he called “practical science,” explained that “a new spirit is taking possession of the public and it has invaded the universities … the spirit of mutual service” and that the university was “no longer conceived of as scholastic cloister, a refuge for the intellectually impractical; but as an organization whose mission is to serve society in the largest possible way.” During the years just before and after the war, scientific journals were rife with articles bearing such titles as “Science and Public Service,” “Pure Science and the Public Weal,” and “The Value of Science” wherein leading scientists nudged one another to take a more active social role. Many leading scientists and advocates of science during this period were, if not active in Progressive political organizations, at least animated by the conviction that reason could and ought to bring about the betterment of society as a whole.

Though Hale was not himself a Progressive, his pre-war ambitions for science and the National Academy certainly fit into this larger progressivist context. Thus, for example, in 1913, the Academy established a “Medal for Eminence in the Application of Science to the Public Welfare.” But it was not until the war began that this notion of productive scientific research gained a political and financial foothold. Hale’s success in establishing the National Research Council crystallized, if it did not create, the conviction that research was indispensable to national security and victory on the battlefield.

Having succeeded in showing that scientific research was indispensable for military preparedness and national defense, Hale began immediately pushing for a peacetime scientific structure, arguing that research could advance the national interest even beyond success in war. As things stood, the National Research Council was little more than a special project of the Academy, convened at the request of the president. Without legislation or at least an executive order establishing the Council, there was no guarantee that it would continue to play the role it had in organizing research during the war. Though the Academy could preserve the Council as an organization, the hard-won coalition with the government and military was dependent on the whims of the president. Choosing to bypass Congress — as Daniel Kevles notes, Hale was “a starched-collar Republican” with a rather dim view of the intellectual standing of the congressional Democrats — Hale decided to appeal once more to President Wilson directly, drafting an executive order through which the president could permanently establish the Council.

In the proposal for Wilson, Hale outlined eight long-term aims and duties for the National Research Council, only three of which explicitly pertained to national defense or the military. But as Kevles notes, the president’s advisors thought that Hale’s plan for the Council was legally dubious and a potential political liability: the drafted executive order suggested that the Council, a private body, would help direct government work in science. After some discussions and revisions, it was pared back to a version Hale found acceptable and Wilson could sign, which he did on May 11, 1918. In its final form, the order praised the Council for its work “in organizing research, in furthering science, and in securing cooperation of government and non-government agencies in the solution of their problems.” It gave the government’s imprimatur to the Council’s work of stimulating research, promoting cooperation among researchers, and properly disseminating scientific and technical information.

“Within a year,” Kevles writes, the National Research Council “had its principal financial gifts in hand,” with support from the likes of the Carnegie Corporation, Henry Ford, and the Rockefeller Foundation. The Council, and with it large-scale scientific research, now had the funding, the institutional position, and the governmental mandate that would allow it to outlive the Great War.

Though by no means devoid of conflict or economic turmoil, the half-century preceding the First World War saw rapid economic growth and intensifying interconnectivity in the United States and much of Europe, with a dazzling array of new goods, services, and comforts, as well as new lines of transportation, communication, and commerce. But any hopes for a lasting era of global peace and prosperity were lost in the blood and smoke of the war. The war’s major operations were some of the deadliest in history, with tens of thousands of soldiers sometimes dying on a single day. The sites of some of the battles still resonate in our cultural memory: Verdun, the Somme, the Marne, Ypres. Given the gruesomeness and the terrible scale of the carnage, with a total of ten million soldiers and perhaps seven million civilians dead on all sides, is it any surprise that the British soldier and poet Wilfred Owen called Horace’s famous line dulce et decorum est pro patria mori (“sweet and fitting is it to die for one’s country”) “the old Lie”?

The war is sometimes credited with disabusing the West of its technological optimism. It “undermined the more naïve expectations of the Europeans and Americans about the inevitably uplifting effects of scientific and technological achievement,” writes the historian Robert Friedel in A Culture of Improvement (2007). The war “confirmed that technology itself indeed appeared to have no limits,” Friedel continues, even as it resulted in “disillusionment with the rosy Victorian promise of moral improvement and uplift through technological, economic, and scientific progress.”

There is some truth to this diagnosis. For example, the interwar years did see a profusion of reflections on the destructive and alienating effects of technology, from modernist literature and the emerging genre of science fiction, to philosophical and cultural commentary. Oswald Spengler’s reactionary two-volume The Decline of the West was published shortly after the war. Lewis Mumford’s seminal book Technics and Civilization was published in 1934; many of Walter Benjamin’s most influential writings on technology and modernity appeared during this time; the Marxist criticisms voiced by György Lukács and the Frankfurt School were also coming into their own. That there was a general cultural disillusionment with the notion of progress is evidenced by the rise of anti-Enlightenment thinkers and political movements, from Martin Heidegger’s existentialist phenomenology to fascism.

But given this disillusionment with progress generally, how did science’s reputation as an agent of progress fare? In his 1971 masterwork The Physicists, Daniel Kevles points to a fascinating 1916 exchange in which a Harvard classicist, Roy K. Hack, wrote in the Atlantic Monthly that Germany had “proclaimed the holy war in the name of science” but that America, too, was “infected with the same maniacal worship of science.”

And just in so far as we Americans, like the Germans, have sinned the sins of greed, just in so far as we too have bowed down before deified science, just in so far as we too have suffered the tools of man to dominate and enslave the spirit of man, so far are the sins of the Germans our own, so far we render ourselves their accomplices.

The journalist Walter Lippmann responded in The New Republic, calling Hack’s charges “hysterical pedantry” and dismissing the “simple formula” underlying Hack’s view of science.

The formula may be compressed. The Germans are science. Science is the Zeppelin. The Zeppelin is murder. Therefore, science is hell. But is it?….

The political ideas which generated this war, the theories of national interest, prestige, honor, patriotism are not the products of science, but territory which science has still to conquer.

Lippmann’s sentiments — that not an excess but rather a lack of science was responsible for war — were echoed by many in subsequent years. Especially noteworthy were the myriad Leftist schools of thought, from progressivism and socialism to Marxism and communism, many of which gave science and scientific methods pride of place. British scientist and public intellectual J. D. Bernal argued for social transformation at the hands of science in such books as The World, the Flesh, and the Devil (1929) and The Social Function of Science (1939), while American economist and sociologist Thorstein Veblen, with such books as The Engineers and the Price System (1921), suggested that a new class of scientific and technical experts be given administrative and even political power over commerce and government.

For those who adhered to less radical views, science could still be held up as a praiseworthy human endeavor; it could explain the nature of the universe and improve standards of living. All in all, as British historian of science and technology D. S. L. Cardwell concludes in his 1975 article “Science and World War I”:

science may have been one of the few institutions to emerge from the war comparatively untarnished and with enhanced prestige. Much else was discredited: national politics, traditional forms of education, organized religion, the economic order. Science, on the other hand, showed that men could achieve things that were still worthwhile.

Science was predominantly seen to be at the same time responsible for victory and innocent of bloodshed. As Cardwell points out, “Even on the battlefield the record of science was, with the exception of the use of poison gas, a good one. None of the main weapons could be ascribed to recent scientific research” while many medical advances could be. Paradoxically, then, science — though it had attained its newfound status partly by integrating itself into large-scale industrial and technological research projects, many specifically for military purposes — remained insulated from the general disenchantment associated with the war. To the extent that science was associated with the war, it was credited with victory. In Great Britain in particular, where wartime scientists had successfully campaigned against the “neglect of science,” the nation was said to owe a “debt to science,” as a 1919 article in Nature argued.

A possible explanation for this seeming paradox — that science achieved such an exalted status during the war but remained insulated from the general disillusionment that war brought — is that science, despite the efforts of Hale and his comrades, was at that time still far more removed from technology, industry, and government than it is today. There was not yet any project or entity of the magnitude that we have since come to associate with federally funded science and technology, such as the Manhattan Project, the Los Alamos National Laboratory, the National Institutes of Health, or NASA. In fact, Hale’s National Research Council at the time still relied on private funds, and many members of the National Academy of Sciences were concerned that Hale’s efforts might diminish the independence from political interests that this body — and indeed science itself — enjoyed, both in practice and in the eyes of the public. Hale, while recognizing the need for federal patronage, feared government interference with science. His vision, however pragmatic, was not a technocratic one. Perhaps optimism in science remained intact and even grew after the war because most people did not yet perceive science to be an instrument of the government, and thus of war, and thus the source of new tools for dealing death.

It would be an error of prolepsis to project back onto World War I and the years leading up to it our more recent notion of the military-industrial complex. But several trends that were already underway when the war began — including industrialization, the commercialization of research laboratories, the professionalization of science, and the belief that science ought to serve some public purpose — came together as never before to meet the urgent demands of the belligerent nations. The American economy became, for the first time, a modern war machine — a science-and-technology-centric industrial model that would come into full force during World War II. During the Cold War, the relationship between science, technology, and government continued to broaden and deepen, including the establishment of a system in which the federal government became a mainstay of funding for research and development. It remains to be seen whether the technological and geopolitical developments of our own era — from new kinds of weapons and warfare to new kinds of institutions and international norms — will tighten or fracture the linkage between science, technology, industry, and the national interest.
