In my last post on

ironic transhumanist tech failures, there was one great example I forgot to mention. If you subscribe to the RSS feed for the

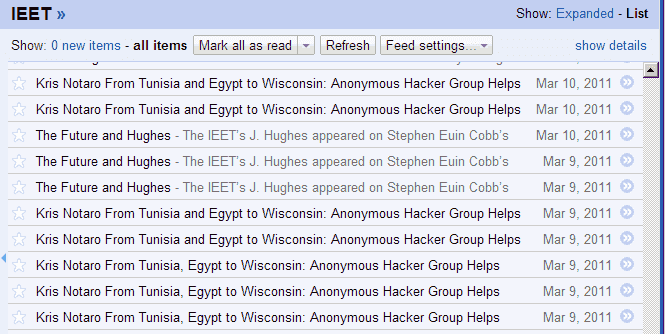

IEET blog, you may have noticed that most of their posts go up on the feed multiple times: my best guess is that, due to careless coding in their system (or a bad design idea that was never corrected), a post goes up as new on the feed every time it’s even modified. For example, here’s what the feed’s list of posts from early March looks like:

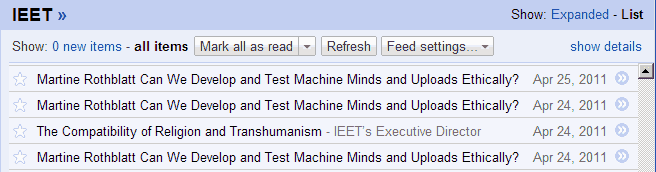

Ouch — kind of embarrassing. Every project has technical difficulties, of course, but — well, here’s another example:

Question: can we develop and test machine minds and uploads ethically? Well, one way to get at that question is to ask what it might say about technical fallibility when such a prominent transhumanist advocacy organization has not yet figured out how to eliminate inadvertent duplicates on its RSS feed, and how such an error might play out when, say, uploading a mind, where the technical challenges are a bit more substantial, and the consequences of accidentally creating copies a bit more tricky.

Don’t get me wrong — we all know that the IEET is all about being Very Serious and Handling the Future Responsibly. I mean, look, they’re taking the proper precaution of thinking through the ethics of mind uploading long before that’s even possible! Let’s have a look at that post:

> Sometimes people complain that they “did not ask to be born.” Yet, nobody has an ethical right to decide whether or not to be born, as that would be temporally illogical. The solution to this conundrum is for someone else to consent on behalf of the newborn, whether this is done implicitly via biological parenting, or explicitly via an ethics committee.

Probably the most famous example of the “complaint” Ms. Rothblatt alludes to comes from Kurt Vonnegut’s final novel, Timequake, in which he depicts Hitler uttering the words, “I never asked to be born in the first place,” before shooting himself in the head. It doesn’t seem that either fictional-Hitler’s or real-Vonnegut’s complaint was answered satisfactorily by their parents’ “implicit biological consent” to their existence. And somehow it’s hard to imagine that either man would have been satisfied if an ethics committee had rendered the judgment instead.

Could Vonnegut (through Hitler) be showing us something too dark to see by looking directly in its face? Might these be questions for which we are rightly unable to offer easy answers? Is it possible that those crutches of liberal bioethics, autonomy and consent, are woefully inadequate to bear the weight of such fundamental questions? (Might it be absurd, for example, to think that one can write a loophole to the “temporal illogicality” of consenting to one’s own existence by forming a committee?) Apparently not: Rothblatt concludes that “I think practically speaking the benefits of having a mindclone will be so enticing that any ethical dilemma will find a resolution” and “Ultimately … the seeming catch-22 of how does a consciousness consent to its own creation can be solved.” Problem solved!

---

In a similar vein, responding to my shameless opportunism in pointing out, in my last post, the pesky ways that technical reality undermines technological fantasy, Michael Anissimov commented:

> In my writings, I always stress that technology fails, and that there are great risks ahead as a result of that. Only transhumanism calls attention to the riskiest technologies whose failure could even mean our extinction.

True enough. Of course, only transhumanism so gleefully advocates the technologies that could mean our extinction in the first place… but it’s cool: after his site got infested by malware for a few days, Anissimov got Very Serious, decided to Think About the Future Responsibly, and, in a post called “Security is Paramount,” figured things out:

> For billions of years on this planet, there were no rules. In many places there still are not. A wolf can dine on the entrails of a living doe he has brought down, and no one can stop him. In some species, rape is a more common variety of impregnation than consensual sex…. This modern era, with its relative orderliness and safety, at least in the West, is an aberration. A bizarre phenomenon, rarely before witnessed in our solar system since its creation…. Reflecting back on this century, if we survive, we will care less about the fun we had, and more about the things we did to ensure that the most important transition in history went well for the weaker ambient entities involved in it. The last century didn’t go too well for the weak — just ask the victims of Hitler and Stalin. Hitler and Stalin were just men, goofballs and amateurs in comparison to the new forms of intelligence, charisma, and insight that cognitive technologies will enable.

Hey, did you know nature is bad and people can be pretty bad too? Getting your blog knocked offline for a few days can inspire some pretty cosmic navel-gazing. (As for the last part, though, it shouldn’t be a worry, as Hitler+ and Stalin+ will have had ethics committees consent to their existences, and all their existential issues will thereby have been solved.)

---

The funny thing about apocalyptic warnings like Anissimov’s is that they don’t seem to do a mite to slow down transhumanists’ enthusiasm for new technologies. Notably, despite his Serious warnings, Anissimov doesn’t even consider the possibility that the whole project might be ill-begotten. In fact, despite implicitly setting himself outside and above them, Anissimov is really one of the transhumanists he describes in the same post, who “see nanotechnology, life extension, and AI as a form of candy, and reach for them longingly.” This is because, for all his lofty rhetoric of caution, he is still fundamentally credulous when it comes to the promise of transformative new technologies.

Take geoengineering: in Anissimov’s first post on the subject, he cheered the idea of intentionally warming the globe for certain ostensible benefits. Shortly afterward, he deleted the post “because of substantial uncertainty on the transaction costs and the possibility of catastrophic global warming through methane clathrate release.” It took someone pointing to a specific, known vector of possible disaster for him to reconsider; before that, a few minutes’ thought about what would be the most massive engineering project in human history had been enough for him to declare it just dandy.

Of course, in real life, unlike in blogging, you can’t just delete your mistakes, such as releasing huge amounts of chemicals into the atmosphere that turn out to be harmful (as we’re learning today when it comes to carbon emissions). Nor did it occur to Anissimov that the one area in which he readily admits concern about the potential downsides of future technologies (security) might also be an issue when it comes to granting the power to intentionally alter the earth’s climate to whoever has the means (whether they’re “friendly” or not).

---

One could go on at great length about the unanticipated consequences of transhumanism-friendly technologies, or the unseriousness of most pro-transhumanist ethical inquiries into those technologies. These points are obvious enough.

What is more difficult to see is that Michael Anissimov, Martine Rothblatt, and all of the other writers who proclaim themselves the “serious,” “responsible,” and “precautious” wing of the transhumanist party — including Eliezer Yudkowsky, Nick Bostrom, and Ray Kurzweil, among others — in fact function as a sort of disinformation campaign on behalf of transhumanists. They toss out facile work that calls itself serious and responsible, capable of grasping and dealing with the challenges ahead, when it could hardly be any less so — but all that matters is that someone says they’re doing it.

Point out to a transhumanist that they are as a rule uninterested in deeply and seriously engaging with the ramifications of the technologies they propose, or suggest that the whole project is more unfathomably reckless than any ever conceived, and they can say, “but look, we are thinking about it, we’re paying our dues to caution — don’t worry, we’ve got people on it!” And with their consciences salved, they can go comfortably back to salivating over the future.

And the alternative is to not think or write about the ethical issues at all? Or, because it is "the world's most dangerous idea," to just wish it away by not addressing its ethical aspects? The premise of this post is that no ethical discussion could in any way support the introduction of transhumanism, because it is "unfathomably reckless." But hey, bubba, the show must go on. It always, always does. The question is: do you want the show to go on with some rules, hoping for the best, or do you just stick your head in the sand and pray? Ethical rules, albeit imperfect and wobblily argued, will have a higher probability of a beneficent outcome than no rules at all. You call this project "unfathomable." OK, but then it's not fair to blame ethicists for their shortcomings in trying to fathom it.